Scaling

Let's start with a general definition of scalability, its types, and why it is useful.

Scalability is the ability of a system to cope with increased load by adding resources. In distributed systems, adding resources such as CPUs, memory, and storage to the existing nodes is known as vertical scaling (up/down), while horizontal scaling (out/in) refers to adding nodes to the system.

The following paragraphs discuss the common concepts for applying horizontal scaling (e.g. scaling out or shared-nothing) to streaming data pipelines.

Stream processing in Spring Cloud Data Flow is implemented architecturally as a collection of independent event-driven streaming applications that connect through a messaging middleware of choice, for example RabbitMQ or Apache Kafka. The collection of independent applications comes together at runtime to constitute a streaming data pipeline. The ability of a streaming data pipeline to cope with increased data load depends on the following characteristics:

- Messaging Middleware - Data partitioning is a widely used technique for scaling the messaging middleware infrastructure. Spring Cloud Data Flow, through Spring Cloud Stream, supports partitioning of streaming data.

- Event-driven Applications - Spring Cloud Stream supports processing data in parallel with multiple consumer instances, which is commonly referred to as application scaling.

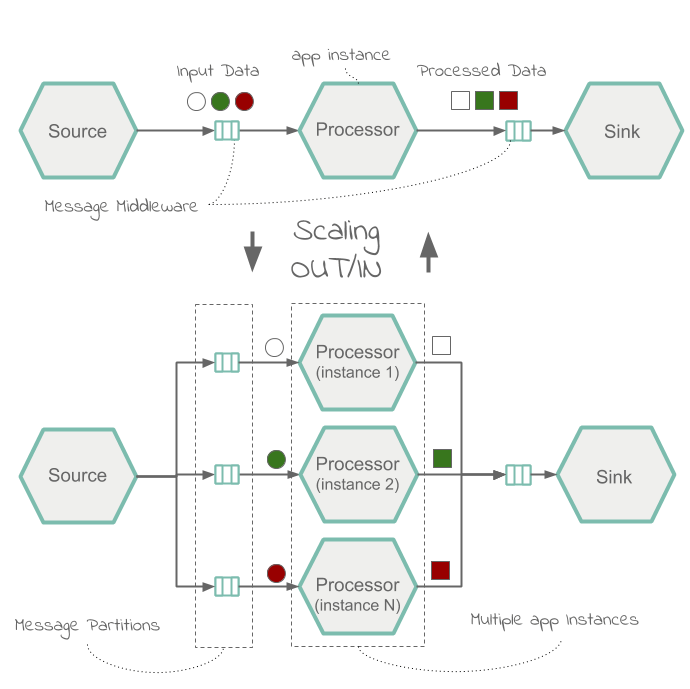

Following diagram illustrates a typical scale-out architecture based on data partitioning and application parallelism:

Platforms such as Kubernetes and Cloud Foundry offer scaling features that let operators control the system's load. For example, operators can use the cf scale command to scale applications in Cloud Foundry and the kubectl scale command to scale applications in Kubernetes. Autoscaling can also be enabled with App Autoscaler in Cloud Foundry and with the Horizontal Pod Autoscaler (HPA) or KEDA in Kubernetes. Autoscaling is usually driven by CPU or memory thresholds, or by message queue-depth and topic-offset-lag metrics.
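To make the threshold-based decision concrete, here is a minimal sketch of a proportional scaling rule of the kind documented for the Kubernetes HPA: the desired instance count is the current count multiplied by the ratio of the observed metric to its target, rounded up. The class name, method name, the min/max clamping, and the sample numbers are assumptions for illustration, not part of any platform API.

```java
public class ReplicaCalculator {

    /**
     * Proportional rule: desired = ceil(current * observed / target),
     * clamped to [minReplicas, maxReplicas].
     * observedMetric and targetMetric are assumed to be per-instance averages
     * (for example, consumer lag per instance).
     */
    public static int desiredReplicas(int currentReplicas, double observedMetric,
                                      double targetMetric, int minReplicas, int maxReplicas) {
        if (targetMetric <= 0) {
            throw new IllegalArgumentException("targetMetric must be positive");
        }
        int desired = (int) Math.ceil(currentReplicas * (observedMetric / targetMetric));
        return Math.max(minReplicas, Math.min(maxReplicas, desired));
    }

    public static void main(String[] args) {
        // Hypothetical numbers: 2 consumers, average lag of 3000 messages, target of 1000.
        System.out.println(desiredReplicas(2, 3000, 1000, 1, 10));
    }
}
```

With the hypothetical numbers in `main`, the rule asks for three times the current instance count. A real autoscaler typically adds stabilization windows and tolerances on top of such a rule to avoid flapping.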

While scaling happens outside of Spring Cloud Data Flow, the applications that are scaled can react to and handle the upstream load automatically. Developers only need to configure message partitioning by using properties such as partitionKeyExpression and partitionCount.
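The idea behind these two properties can be sketched as follows: a key extracted from each message is mapped to one of partitionCount partitions, so that all messages with the same key reach the same consumer instance. This is a simplified hash-modulo illustration, not the actual Spring Cloud Stream implementation; the class, method names, and sample keys are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class PartitionSketch {

    /** Maps a partition key to an index in [0, partitionCount). */
    public static int selectPartition(Object key, int partitionCount) {
        // floorMod keeps the result non-negative even for negative hash codes.
        return Math.floorMod(key.hashCode(), partitionCount);
    }

    /** Groups keys by the partition (and thus the consumer instance) that would receive them. */
    public static Map<Integer, List<String>> assign(List<String> keys, int partitionCount) {
        Map<Integer, List<String>> byPartition = new TreeMap<>();
        for (String key : keys) {
            byPartition
                .computeIfAbsent(selectPartition(key, partitionCount), p -> new ArrayList<>())
                .add(key);
        }
        return byPartition;
    }

    public static void main(String[] args) {
        // Hypothetical keys, standing in for the value a partitionKeyExpression would extract.
        List<String> keys = List.of("sensor-1", "sensor-2", "sensor-3", "sensor-1");
        System.out.println(assign(keys, 3));
    }
}
```

Because the mapping depends only on the key and the partition count, both occurrences of the same key land in the same partition. It also shows why changing the partition count reshuffles keys across consumers.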

In addition to the platform-specific, low-level APIs, Spring Cloud Data Flow provides a dedicated Scale API designed for scaling data pipelines. The Scale API unifies the various platforms' native scaling capabilities into a uniform and simple interface. It can be used to implement scaling control based on application-domain or business logic.

The Scale API is reusable across all the Spring Cloud Data Flow supported platforms. Developers can implement an auto-scale controller and reuse it with Kubernetes, Cloud Foundry or even with the Local platform for local testing purposes.
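As a rough sketch of how such a controller might call the Scale API over REST, the following builds (but does not send) a scale request with the JDK HTTP client. It assumes the endpoint has the form `POST /streams/deployments/scale/{streamName}/{appName}/instances/{count}`; verify the path against the REST API guide for your Data Flow version. The server address, stream name, and application name are hypothetical.

```java
import java.net.URI;
import java.net.http.HttpRequest;

public class ScaleRequestSketch {

    /**
     * Builds a request that asks the Data Flow server to run `count` instances
     * of one application in a deployed stream. The URL layout is an assumption.
     */
    public static HttpRequest scaleRequest(String serverUri, String streamName,
                                           String appName, int count) {
        if (count < 0) {
            throw new IllegalArgumentException("count must not be negative");
        }
        URI uri = URI.create(String.format(
                "%s/streams/deployments/scale/%s/%s/instances/%d",
                serverUri, streamName, appName, count));
        return HttpRequest.newBuilder(uri)
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString("{}")) // no extra properties
                .build();
    }

    public static void main(String[] args) {
        // Hypothetical server address, stream name, and application name.
        HttpRequest request = scaleRequest("http://localhost:9393", "my-stream", "log", 3);
        System.out.println(request.method() + " " + request.uri());
        // To send it: HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString());
    }
}
```

Since the request depends only on the server address and names, the same controller code could target Kubernetes, Cloud Foundry, or the Local platform without changes.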

Visit the Scaling Recipes to learn how to scale applications by using the SCDF Shell or how to implement autoscaling for streaming data pipelines with SCDF and Prometheus.